When OpenAI CEO Sam Altman stepped onto the stage, many people may have pictured Steve Jobs in 2007 and been carried back to the dawn of the last technological era.

Even Sam Altman himself did not expect that in just one short year, solely through word of mouth, OpenAI would become one of the most widely used artificial intelligence platforms in the world, or that ChatGPT would become the dominant presence in the global AI field.

In the past year, approximately 2 million developers have built on OpenAI’s API (Application Programming Interface). OpenAI’s corporate clients include 92% of Fortune 500 companies, and ChatGPT has attracted over a hundred million users who engage with this epoch-making AI product every week, making it an indispensable assistant in their work and lives.

An undeniable fact is that OpenAI is leading the entire world forward.

On November 7th, at 2 am Beijing time, the OpenAI DevDay conference officially began. It is OpenAI’s first developer day since the release of ChatGPT in November 2022 ignited a global AI craze, and one of its most important events since GPT-4, enough to shake the global tech community.

Nowadays, there are fewer and fewer companies that can excite people. Even the annual “Tech Spring Festival” Apple event is starting to disappoint. While lamenting the letdown of Apple, followers are pinning their hopes on this tech newcomer. In the midst of fervent slogans like “Trump card,” “Crush,” and “The moment of the new iPhone,” they eagerly anticipate GPT once again setting the internet ablaze.

So, has the new singularity truly arrived?

1. GPT-4 Turbo, not dazzling, but powerful enough

Before the official start of the conference, many awaited the arrival of the next ‘iPhone moment,’ especially AI professionals. Yet, their emotions were incredibly complex, intertwined with anticipation and fear. They anticipated the arrival of new technology and an infinite future brought by AI leaders, yet feared being directly surpassed by OpenAI’s formidable strength.

However, OpenAI took an unconventional path this time. Amid various speculations, many predicted that OpenAI would unveil a new killer application at this developer conference, perhaps GPT-5, with performance capable of outclassing all current large model products, maybe even dethroning GPT-4.

But OpenAI did not. A new model did arrive, as expected, under the more stylish name GPT-4 Turbo. Its main updates cover six areas: context length, model control, knowledge updates, multimodal input and output, model customization, and higher rate limits. Additionally, new features like copyright protection were introduced.

In essence, this is a comprehensive upgrade to GPT-4. Firstly, there’s an increase in context length, allowing users to engage in longer conversations with GPT-4 Turbo than with previous versions. The previous maximum context length for GPT-4 was 32K tokens, with daily use typically limited to 8K, restricting instruction outputs and deep conversations. Now, OpenAI has raised the limit to 128K tokens, 16 times the everyday 8K window and 4 times the old 32K maximum. To put it into perspective, that is roughly the content of a 300-page book. Imagine having a chat as long as Jules Verne’s ‘Twenty Thousand Leagues Under the Sea’ for just a few dollars. From now on, getting GPT to help with writing web content is no longer a problem (at least in terms of length; aesthetics may vary).

On the other hand, changes are reflected in fine-tuning details and model control. Simply put, new features like JSON mode give you better control over GPT, so you get answers in the format you want, while the ability to call more functions makes GPT’s responses more stable. OpenAI will also offer more model fine-tuning services primarily aimed at individual enterprises, providing higher-performance, more specialized GPT products for a fee.
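To make the JSON control concrete, here is a minimal sketch of what such a request might look like. It only builds the request body and parses a reply; nothing is sent over the network. The model name `gpt-4-1106-preview` and the `response_format` field reflect the API as it was understood at launch and should be treated as assumptions, and the sample reply is written by hand purely for illustration.

```python
import json


def build_json_mode_request(question: str) -> dict:
    """Build (but do not send) a chat-completions request body with JSON mode on."""
    return {
        # Assumed preview model name for GPT-4 Turbo at launch.
        "model": "gpt-4-1106-preview",
        # JSON mode: asks the model to emit a single valid JSON object.
        "response_format": {"type": "json_object"},
        "messages": [
            # The instructions themselves should mention JSON explicitly.
            {
                "role": "system",
                "content": "Answer in JSON with the keys 'answer' and 'year'.",
            },
            {"role": "user", "content": question},
        ],
    }


def parse_reply(content: str) -> dict:
    """Parse the reply text; json.loads raises ValueError if it is not valid JSON."""
    return json.loads(content)


request_body = build_json_mode_request("When was ChatGPT released?")

# Hypothetical reply text, written by hand for illustration only.
sample_reply = '{"answer": "November 2022", "year": 2022}'
parsed = parse_reply(sample_reply)

print(json.dumps(request_body, indent=2))
print(parsed["year"])
```

The point of the design is that the caller can hand the reply straight to a JSON parser instead of scraping an answer out of free-form prose.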

The rest are upgrades that could be anticipated. The knowledge base update goes without saying. Since the release of ChatGPT, its stale knowledge has drawn criticism. For instance, if you asked about events in 2022, it could only tell you information up to 2021. This time, OpenAI finally moved the knowledge cutoff from 2021 to April 2023. Although some lag still exists, GPT-4 Turbo is finally a bit more ‘in vogue.’

Multimodality is inevitable. GPT-4 Turbo integrates OpenAI’s existing visual, speech, and other model products, allowing future implementations of graphic-to-graphic and speech input, even providing developers with six preset voice options. This reminds me of the recent hit ‘Oh No! I’m Surrounded by Beauties!’

Regarding rate limits, OpenAI has doubled the limit for GPT-4 users, giving them twice the ‘surfing’ room. If that’s not enough, through your API account, you can pay to apply for an increased rate limit, allowing GPT to take off further.

However, for many observers, these updates have not met expectations. GPT-4 Turbo arrived as a ‘brand-new version’ but failed to impress.

“Many people are discussing the increased context length this time, but competitors such as Anthropic’s Claude, and even domestic large models, have already been able to handle inputs of tens of thousands or even hundreds of thousands of words. The advantage of this upgrade for GPT is not significant,” AI practitioner and GPT user Climbing told Hedgehog Community. In his view, this version of GPT-4 Turbo is not surprising; instead, OpenAI seems to be doing something routine and mediocre. “Many large model developers are already doing these multimodal functions. It’s not surprising at all. What I’m most looking forward to is the product’s intelligence.”

Similar sentiments abound online. The previous upgrade of GPT-4 Plus left many calling GPT-4 ‘dull.’ Many users were hoping for GPT-5, but OpenAI offered GPT-4 Turbo. While the upgrade can enhance the user experience to some extent, whether there is a significant improvement in ‘intelligence’ still needs further verification.

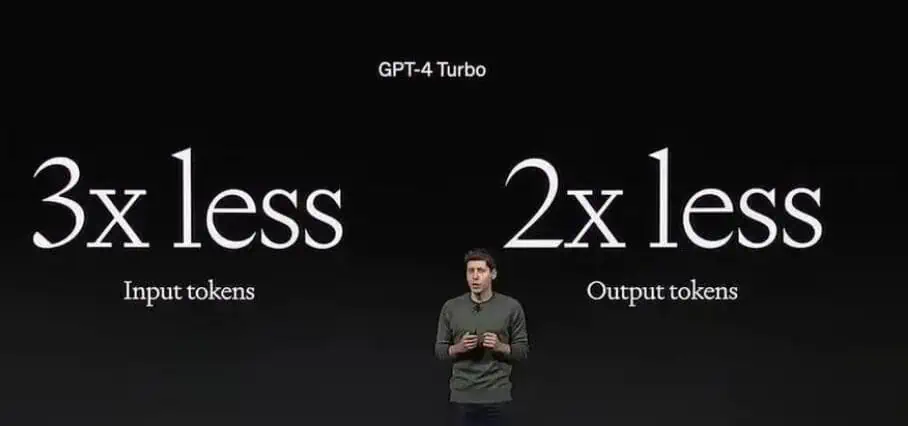

This time, OpenAI did not elevate the intelligence of GPT to the next level but chose to fill in the ‘shortcomings,’ in other words, to attract more users through product experience upgrades. A direct example is OpenAI’s decision to significantly reduce the overall price of its API. The input price for GPT-4 Turbo has been cut to one third of GPT-4’s, to one cent per thousand tokens, and the output price has been halved, to three cents per thousand tokens. Sam Altman announced at the conference that GPT-4 Turbo’s overall rate is more than 2.75 times cheaper than GPT-4.
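The arithmetic behind these figures is easy to check. The sketch below uses the GPT-4 Turbo prices quoted above and back-calculates the older GPT-4 prices from the stated reductions (three times one cent for input, two times three cents for output). The dictionary keys are just labels for this example, and the 9-to-1 mix of input to output tokens is an assumption chosen because it happens to reproduce the 2.75 figure; the conference did not state which mix was used.

```python
# Prices in US dollars per 1,000 tokens.
PRICES = {
    # Back-calculated from the reductions described in the article.
    "gpt-4": {"input": 0.03, "output": 0.06},
    # Quoted directly in the article.
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
}


def cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the dollar cost of one request for the given token counts."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000


def savings_ratio(input_tokens: int, output_tokens: int) -> float:
    """How many times cheaper GPT-4 Turbo is than GPT-4 for the same traffic."""
    return cost("gpt-4", input_tokens, output_tokens) / cost(
        "gpt-4-turbo", input_tokens, output_tokens
    )


# Assumed traffic mix: nine input tokens for every output token.
print(round(savings_ratio(9_000, 1_000), 2))

# Cost of filling the entire 128K context window once with input tokens.
print(round(cost("gpt-4-turbo", 128_000, 0), 2))
```

With that assumed mix the ratio comes out at 2.75, and reading a full 128K-token context costs about $1.28 in input tokens. The ratio always lies between 2 (all output) and 3 (all input), so input-heavy workloads benefit most.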

Many liken OpenAI to the next Apple, but the two differ on price cuts. AI is still niche, and OpenAI understands the importance of expanding its user base, even under the pressure of burning money. The purpose of the first half of the conference becomes apparent. Sam Altman and OpenAI are determined to take the inclusive route, crushing all competitors with a bold approach:

As the world’s top large model product, OpenAI has not only comprehensively upgraded its product, reducing usage costs, but also addressed users’ copyright concerns (copyright shield). Countless words converge into one sentence, ‘Come and be our user!’

2. Give a man a fish, and you feed him for a day. Teach a man to fish, and you feed him for a lifetime

Creating inclusive products not only validates the scaling laws OpenAI has proposed (where performance improves in step with increases in model size, data, and compute) but also serves as a strategy for growing market share. The additional actions during the conference reveal OpenAI’s ambitions.

Apart from launching new model products, the release of GPTs and Assistants API is even more exciting. In simple terms, OpenAI aims to construct a vast ecosystem of large models. Through this ecosystem, users can access any AI application product they desire and become developers of AI Agents using OpenAI’s API system.

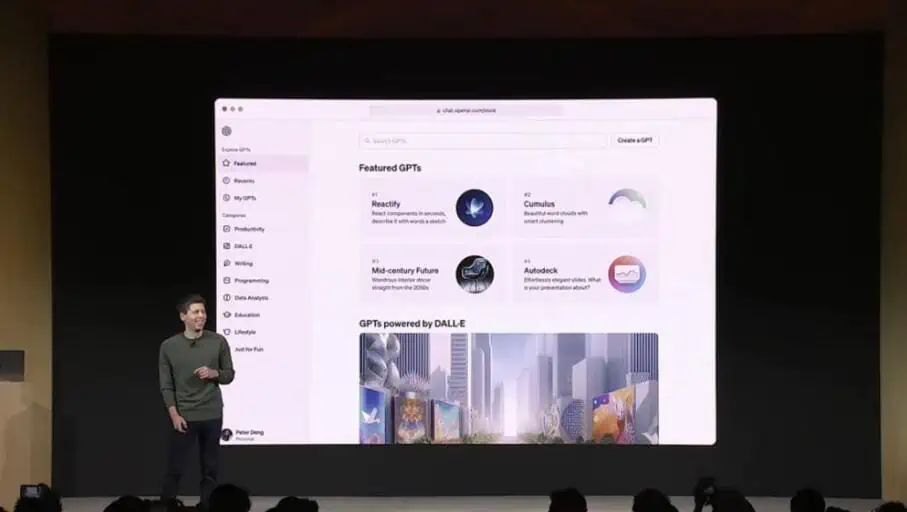

Firstly, GPTs are customized versions of ChatGPT created for specific purposes, using GPT products as a technical base to derive various GPT variations. In May 2023, OpenAI introduced the plugin system for GPT products, launching a batch of large model applications targeting various verticals and professional uses. This laid the foundation for GPTs. This time, OpenAI chose to separate these applications, no longer attaching them as plugins but making them independent apps, aggregated through the GPT Store.

To draw a comparison, GPTs are like the apps of the mobile internet era, and the GPT Store is the App Store. However, all these apps are based on OpenAI’s large language models, representing products of the AI era.

Currently, the GPT Store features several applications, including officially developed ones such as the visual model DALL·E, Game Time for explaining board and card games, and Gen Z memes for understanding the latest trends and popular memes of Generation Z. During the conference, OpenAI’s staff demonstrated using the Zapier app to arrange personal schedules and communicate in real time through AI.

Most importantly, everyone can create their own GPT. On-site, Sam Altman opened GPT Builder and, through a few simple conversations, created a GPT providing consultation services for business founders. Once he uploaded the content of a speech, this GPT became a professional “startup advisor” in less than three minutes.

No need for coding, no complex UI building—just conversation, knowledge base uploading, and setting some action instructions. Developing an intelligent assistant in three minutes is a fairy tale achievable only in the AI era.

Users can use their personally developed GPT privately, offer it to businesses in need, or make it public through the GPT Store, earning a profit share given by OpenAI. Users aren’t just spending money on products; they can also earn through GPT.

For individual developers, the applications they can develop remain relatively simple. Still, for professional development teams and businesses, the cost issue has been somewhat resolved.

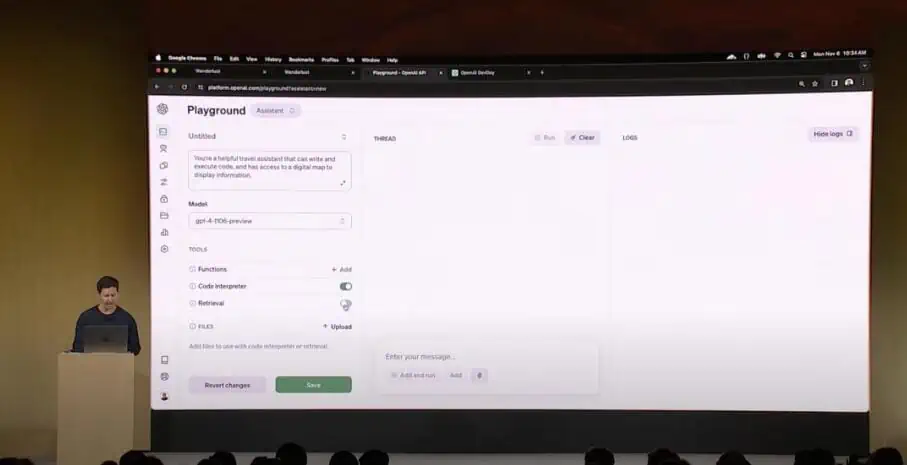

The Assistants API is the tool for achieving “one-click creation of GPT applications.” In the past, developing an agent was a complex process, requiring a professional team to complete extensive and intricate setup work. With the Assistants API, developers can create an assistant with specific instructions, additional knowledge, and the ability to call various models and tools, and they can try it out in the Assistants playground without writing a single line of code.

In a zero-code situation, you only need to input instructions and fine-tune them to create a high-quality AI application or agent.
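Behind that no-code surface, an assistant boils down to a short declarative definition: a name, instructions, a model, and a list of tools. The sketch below builds such a definition as a plain dictionary and sends nothing. The helper `build_assistant_definition` is hypothetical, and the field names, the model name, and the tool types `code_interpreter` and `retrieval` follow the beta as announced at launch, so treat them as assumptions that may have changed since.

```python
# Built-in tool types as announced at launch (assumed; may have changed since).
KNOWN_TOOL_TYPES = {"code_interpreter", "retrieval"}


def build_assistant_definition(
    name: str,
    instructions: str,
    tool_types: list[str],
    model: str = "gpt-4-1106-preview",  # assumed launch-time model name
) -> dict:
    """Build (but do not send) the definition of an assistant."""
    unknown = set(tool_types) - KNOWN_TOOL_TYPES
    if unknown:
        raise ValueError(f"unknown tool types: {sorted(unknown)}")
    return {
        "name": name,
        "instructions": instructions,
        "model": model,
        # Each tool is declared by type; the platform runs it on the model's behalf.
        "tools": [{"type": tool_type} for tool_type in tool_types],
    }


# A definition in the spirit of the "startup advisor" demo described earlier.
definition = build_assistant_definition(
    name="Startup Advisor",
    instructions="Give founders concise, practical advice based on the uploaded talk.",
    tool_types=["retrieval"],
)
print(definition)
```

Everything that used to require custom orchestration code (retrieving knowledge, running tools, keeping conversation state) is requested declaratively here, which is why the setup can be reduced to filling in a form or describing the assistant in conversation.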

In straightforward terms, OpenAI is encapsulating the “development process” through AI, ushering in an era where creating applications is as simple as typing or saying a few words. If the Assistants API is eventually opened to all users, everyone can become a product manager, with AI handling the programming.

From OpenAI and developers’ perspectives, this is undoubtedly an act of “expanding horizons,” benefiting all users and collectively building an AI application ecosystem based on OpenAI, promising new prosperity. However, for many in the industry, this is undoubtedly a “slaughter.”

As early as the YC Alumni Sharing in October, Sam Altman had warned so-called “AI” companies that merely put a shell around ChatGPT: as OpenAI’s model products gradually broaden their scope, their survival space will keep shrinking, and they will inevitably face extinction.

This conference confirms that Sam Altman’s words were a “forewarning.” OpenAI aims not only to beat everyone on user experience but also to build barriers in vertical applications and developer competition through GPTs and the Assistants API. The phrase “Those who learn from me prosper; those who oppose me perish” no longer holds, because under OpenAI’s offensive, all competitors must face the “threat of extinction.”

Some observers fail to comprehend the efforts OpenAI is making in these areas. In their view, many actions are merely for market dominance. “Now many of OpenAI’s actions are entirely driven by business logic, such as GPT Store. Apart from building an ecosystem and squeezing competitors’ survival space, such extensive investments are not necessary.”

AI industry observer Blue Qi believes that OpenAI is gradually becoming a traditional tech company, where business competition has become the most important means apart from research and development. “Many old users are not quite satisfied with GPT-4’s performance. Perhaps they should continue to focus on the intelligent development of large language models. It’s evident that current development-type products cannot handle high-quality application development, leading to an influx of low-quality applications.”

For such a unicorn company with obvious first-mover advantages, moving towards scalability and even monopolization might be natural. However, the actual implementation of applications is the most crucial step.

Currently, one of OpenAI’s key research focuses is to make AI increasingly practical. This involves not only driving the achievement of AGI (Artificial General Intelligence) through scalability but also addressing real-world operational issues. “So, I understand, but there’s always an unconscious hope that they move faster.”

3. OpenAI’s Dilemma

Before the official release of ChatGPT, OpenAI was indeed sprinting on a track with few competitors.

In October, at the YC Alumni Sharing event, Sam Altman shared a small story about OpenAI. He admitted that, initially, large language models were not the organization’s main research focus. They experimented with research in various areas such as robotics and game AI. However, Alec Radford, a graduate of Franklin W. Olin College of Engineering, consistently focused on the direction of large language models.

Seven years later, the area he researched ultimately shaped OpenAI, changing the direction of global technological development. The persistence of an undergraduate influenced the entire tech industry, constructing the foundation of OpenAI’s development. The ivory tower-like innovation, often considered one of the reasons for OpenAI’s success, is embodied in this fascinating story.

Despite substantial sponsorship from entrepreneurs like Musk, what stood out most about OpenAI was its reluctance to engage in the capital game. Instead, it opted for dedicated research. Sam Altman firmly believed that generating high returns was not the primary goal of investing in startup companies. The most desired outcome was to drive innovation that could overturn everything.

However, with the emergence of ChatGPT, everything is rapidly changing. OpenAI’s opponents have become everyone, and research is no longer the sole concern.

Reflecting on the past year, OpenAI has gained more and faced more choices.

As a non-publicly traded company, its valuation has reached a staggering $90 billion. However, like other unicorns, OpenAI is now facing the most basic operational issues.

GPT is a key that leads OpenAI into a vast world but also brings the heaviest burden—reality—to the company. The ultimate goal of being a “tech nouveau riche” is not just about being “nouveau riche”; stabilizing its position and consistently standing at the pinnacle is the real challenge. After all, ChatGPT has not truly achieved “dominance over all.”

Therefore, OpenAI needs to make continuous choices, pushing for commercialization, spreading the high costs of research and computing through scale, and building a robust AI application ecosystem. These are the choices made in this context.

On the other hand, limitations in computing power, chips, and other areas continually prompt OpenAI to strategize anew. Despite having Microsoft’s support, OpenAI considers investment a crucial “trump card.” Currently, OpenAI has deployed $175 million to invest in the next generation of AI startups and has also nurtured a large number of tech startups through “technical equity.”

From a non-profit organization to a tech unicorn, a product company, and even a tech giant, in just one year, OpenAI is undergoing a drastic transformation. It can be affirmed that to reassure investors like Microsoft and stay competitive against giants like Google and Meta, making products commercially viable is crucial.

At this conference, Microsoft CEO Satya Nadella also appeared, and Sam Altman once again emphasized the relationship with Microsoft, stating, “We are deepening our collaboration with Microsoft.”

Before this, there were speculations and rumors about the collaboration’s nature, with some even suggesting that Microsoft’s AI business was threatened by OpenAI, and the collaboration might come to an end. The CEO’s presence this time seemed more like a “debunking” of such speculations. OpenAI needs these conversations to instill confidence in users and investors, although they are already quite confident.

Fortunately, OpenAI still maintains its ultimate goal of achieving AGI and consistently stays at the forefront. It is like a sharp sword, constantly forging ahead, while opponents are being cut down.

After the conference, a meme circulated widely. A startup founder invited to the Developer Day bluntly stated that Sam Altman had destroyed his startup, valued at $3 million, and he only received $500 in OpenAI API credits (a gift prepared for each developer at the event). Regardless of how competition between giants unfolds, startups in the AI application field undoubtedly face a nightmare.

On X, another user likened this conference to the infamous “Red Wedding” conspiracy in the TV series “Game of Thrones,” implying that OpenAI invited developers to witness the “hellish joke” of their shattered entrepreneurial dreams. Someone fed this tweet to GPT-4 Turbo, and it accurately summarized the tweet’s meaning.

One comment read like a fable: the most marvelous thing is that the one posing this riddle is the answer itself.
